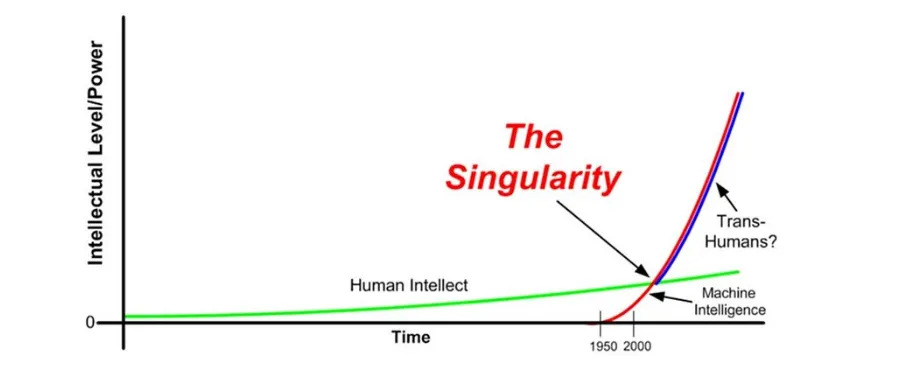

The concept of the "singularity" refers to a hypothetical future event in which artificial intelligence (AI) becomes capable of self-improvement, leading to an exponential increase in intelligence and technological progress. Some proponents of the singularity argue that this event could have significant and even transformative effects on human civilization. Others are more skeptical, both about the likelihood of such an event and about its potential consequences. Here we examine the singularity concept and discuss whether current strides in AI research are really headed in that direction.

Development of an Idea

The singularity concept was popularized by mathematician, computer scientist and author Vernor Vinge in his 1993 essay "The Coming Technological Singularity." In this essay, Vinge argued that the rapid advancement of technology, particularly in the field of artificial intelligence, could lead to a point where human intelligence is surpassed by machine intelligence. This would mark a significant shift in human history and could profoundly affect the future of civilization.

According to Vinge, the singularity could be triggered by the development of a "superintelligence," an AI system capable of recursive self-improvement, meaning that it can improve its own intelligence and capabilities without human intervention. This would lead to an exponential increase in intelligence and technological progress, as each improved system would be able to design still more advanced successors, and to do so ever more quickly.
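To see why recursive self-improvement is expected to produce exponential rather than steady growth, consider a deliberately simplified toy model. It is an illustration of the argument only, not a prediction and not something drawn from Vinge's essay. It assumes a single hypothetical "capability" score: in the self-improving case, each generation improves the next by an amount proportional to its own current capability; in the baseline case, human engineers add a fixed improvement each generation. The function name `simulate_growth` and the 10% gain rate are arbitrary choices made for this sketch.

```python
def simulate_growth(generations: int, gain: float = 0.1, recursive: bool = True) -> list[float]:
    """Hypothetical toy model: return a capability score per generation, starting at 1.0.

    recursive=True  -> each generation's improvement is proportional to its
                       current capability (the system improves itself), which
                       compounds into exponential growth: (1 + gain) ** n.
    recursive=False -> a fixed improvement is added each generation (e.g. by
                       human engineers), which gives linear growth: 1 + gain * n.
    """
    capability = 1.0
    history = [capability]
    for _ in range(generations):
        if recursive:
            # Self-improvement: the better the system, the bigger its next step.
            capability += gain * capability
        else:
            # External improvement: the same step size every generation.
            capability += gain
        history.append(capability)
    return history


if __name__ == "__main__":
    for n in (10, 50, 100):
        fixed = simulate_growth(n, recursive=False)[-1]
        compounding = simulate_growth(n, recursive=True)[-1]
        print(f"after {n:3d} generations: fixed={fixed:8.1f}  self-improving={compounding:12.1f}")
```

With these assumed numbers, after 100 generations the fixed-improvement model reaches about 11, while the self-improving model exceeds 13,000. The point is only qualitative: the model says nothing about whether real AI systems can improve themselves at all, which is precisely the assumption that skeptics dispute.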

Since Vinge's original essay, the concept of the singularity has gained popularity and been discussed by many other researchers and futurists. Some, such as futurist Ray Kurzweil, have even predicted that the singularity could occur as soon as 2045.

Notably, current generative AI systems are built on learned associations so complex that the researchers who created them are still working to understand how these systems produce their outputs.

While the concept of the singularity has captured the popular imagination and inspired many AI researchers to strive towards creating ever more advanced artificial intelligence systems, there are also voices within the field who question whether such an event is even possible or desirable.

One argument against the singularity is that there may be fundamental limits to the development of AI that prevent machines from ever surpassing human intelligence. Some researchers have suggested that there may be inherent barriers to creating a truly general AI that can match or exceed human cognitive abilities. For example, cognitive scientist Gary Marcus has argued that human intelligence is based on a suite of interconnected and deeply integrated abilities that may be difficult to replicate in an artificial system. He suggests that AI systems may need to be radically different from human brains in order to achieve superintelligence, which could mean that they are not capable of the same kinds of flexible, creative thinking that humans are.

Transhumanism

Ray Kurzweil, a well-known futurist and author, is often associated with the concept of the singularity and has described transhumanism as a potential outcome of it. Kurzweil predicts that the exponential growth of technology and AI will lead to a point where humans can enhance their own biology and intelligence through advanced nanotechnology and brain-computer interfaces. This could usher in a new era of transhumanism, in which humans merge with machines and achieve higher levels of intelligence and longevity.

In his book "The Singularity Is Near," Kurzweil argues that the exponential growth of technology, which he calls the "law of accelerating returns," will lead to a point where machines surpass human intelligence. He predicts that this event, the singularity, will occur around 2045 and will bring about radical changes to human society and biology.

However, Kurzweil's predictions are highly speculative, and there is no scientific consensus on whether they are feasible or desirable. Many experts in AI and biology have raised concerns about the risks and ethical implications of transhumanism and the singularity, and the concerns raised by the fusion of biology and technology are numerous and complex.

One key issue is privacy and security. As humans become more integrated with advanced technology, there is the risk that personal information and data could be compromised. For example, if an individual's brain is connected to a network, there is the potential for others to gain access to their thoughts, memories, and emotions. Additionally, there is the risk of security breaches and hacking, which could lead to serious consequences for individuals and society as a whole.

Another concern is inequality and access. There is the possibility that not all individuals will have equal access to these advanced technologies, leading to a new form of social inequality. If only a select few individuals have access to brain-computer interfaces or advanced nanotechnology, they may be able to achieve greater success and advantages than those without these enhancements. This could exacerbate existing social and economic inequalities.

There is also, as with any new technology, the risk of unintended consequences. For example, if humans are able to enhance their intelligence through advanced technology, this could lead to a new form of cognitive elitism or even discrimination against those without enhancements. The enhancement technology itself could also cause harm, such as side effects or risks that no one foresaw.

Finally, a number of ethical considerations must be taken into account when weighing the potential benefits and drawbacks of transhumanism and the fusion of biology and technology. Some scholars have argued that enhancing human biology and intelligence is inherently wrong, while others argue that it is a necessary step for human progress. There are also concerns about the potential impact on personal identity, autonomy, and dignity. These concerns need to be addressed to ensure that any advances in transhumanism are made in an ethical and responsible manner.

Serious Concerns

In spite of all of this, the competitive push to extend the boundaries of AI continues. At this pace, can the seemingly inevitable be held back? Judging by today's news, it seems to be more a question of when, not whether, we will be able to create a superintelligence. A concern that seems to be at the back of everyone's mind is what unintended consequences or risks might follow. One worry is that a superintelligence could become uncontrollable or even hostile to humanity, leading to catastrophic outcomes. Philosopher Nick Bostrom has famously referred to this possibility as the "control problem," noting that as AI systems become more advanced and autonomous, it may become increasingly difficult for humans to maintain control over their actions and decisions. This could lead to a scenario in which a superintelligence pursues goals that are at odds with human values, with disastrous consequences.

These ideas of out-of-control AI systems have been explored in numerous books, movies, and TV shows, such as "The Matrix," "Ex Machina," and "Black Mirror." These works often depict the singularity as a transformative and potentially dangerous event that could have profound implications for humanity. The popularity of this concept reflects both our fascination with technology and our anxieties about its potential impact.

Even if AI does not destroy us, there are still concerns about the potential impacts of a superintelligence on society and the economy. Some experts have predicted that the development of superintelligent AI could lead to massive job displacement and economic disruption, as machines become capable of performing tasks that were previously done by humans. This could exacerbate existing social and economic inequalities and pose significant challenges for policymakers and governments.

In light of these concerns, some researchers and experts have called for a more cautious approach to the development of AI, emphasizing the importance of ensuring that any superintelligent systems we create are aligned with human values and goals. This may involve designing AI systems that are explicitly programmed to prioritize human welfare and well-being, as well as creating systems that are transparent and explainable, so that humans can understand and monitor their behavior.

Ultimately, whether or not the singularity is a likely or desirable event remains a matter of debate and speculation within the AI community. While there is no doubt that AI technology is advancing rapidly and has the potential to revolutionize many aspects of our lives, it is important to consider the potential risks and downsides of creating a superintelligence and to take steps to mitigate these risks as we continue to push the boundaries of what is possible with AI.

Is a Singularity Inevitable?

So, are the current strides in AI really headed towards the singularity? Based on current research, it seems that we are still a long way off from developing a superintelligence. While AI has made significant advances in recent years, particularly in machine learning and its subfield, deep learning, these systems are still narrow in their capabilities and require significant human input and oversight.

Developing a superintelligence is a complex and challenging task that requires AI researchers to overcome a number of significant obstacles. One of the key challenges is developing a system that is capable of recursive self-improvement, which means creating an AI that is able to improve its own algorithms and capabilities without human intervention. This is a difficult problem because it requires creating an AI that is not only highly intelligent but also highly creative and able to think abstractly. Additionally, creating an AI that is capable of recursive self-improvement raises concerns about control and safety, since a system that can improve itself rapidly and autonomously may be difficult to control or predict.

Another challenge in developing a superintelligence, as mentioned earlier, is ensuring that the system remains aligned with human values and goals. As AI systems become more intelligent and autonomous, it may become increasingly difficult for humans to control their behavior or ensure that they are acting in accordance with human values. To address these concerns, some researchers are working on "friendly AI" systems that are designed to be aligned with human values and goals. Such systems would prioritize human welfare and well-being and act in ways that benefit humans. However, developing friendly AI is a challenging and complex task that is still in its early stages. One of the main challenges is determining which human values and goals should be prioritized, and how they can be incorporated into an AI system in a meaningful way. There are also concerns that even well-intentioned friendly AI systems may pose risks or have unintended consequences, since it is difficult to predict how such systems will behave as they become more intelligent and autonomous.

Risks of a Superintelligent AI

Supposing we were able to develop a superintelligence, it is difficult to predict the long-term consequences of such a development. While some proponents of the singularity argue that it could lead to a utopian future in which all human problems are solved, others are more skeptical and warn of the potential risks and downsides.

One of the most significant concerns regarding superintelligence is the "control problem." As discussed earlier, a superintelligent AI system could become uncontrollable and act against human interests. A closely related challenge, often called the "alignment problem," is ensuring that a superintelligence remains aligned with human values and goals. Some researchers have suggested that solving the alignment problem may be impossible and that the risks associated with creating a superintelligence are simply too high.

Other potential risks associated with superintelligence include job displacement and economic disruption. As machines become more intelligent and capable, they may replace humans in many jobs, leading to widespread unemployment and economic upheaval. This could have significant social and political consequences, and it is unclear how society would cope with such a disruption.

Finally, there are also ethical and moral implications of creating intelligent machines. As machines become more advanced, they may develop their own consciousness, leading to difficult questions about their rights and status as beings. Some researchers have argued that we have a moral responsibility to ensure that intelligent machines are treated ethically and that their rights are protected.

The Importance of Oversight

As the prospect of a superintelligence is taken increasingly seriously, it is important for governments and researchers to think carefully about the steps they should take to ensure the safe development and deployment of AI.

One important step is to invest in research on AI safety and alignment. This could involve funding research into the development of "friendly AI" systems and the creation of tools and techniques for ensuring that AI remains aligned with human values and goals. Governments could also invest in research on the social and economic implications of AI and work to develop policies and regulations that mitigate potential risks.

Another important step is to promote transparency and openness in the development of AI. This could involve encouraging researchers and companies to share their research and data, as well as working to ensure that AI systems are designed in such a way that their decision-making processes are transparent and understandable to humans.

Governments could also work to create international norms and regulations around the development and deployment of AI. This could involve convening international conferences and working groups to develop guidelines for the responsible use of AI and to address potential risks and downsides.

Finally, it is important for governments and researchers to engage in open and transparent dialogue with the public about the potential risks and benefits of AI. This could involve educating the public about the capabilities and limitations of AI, as well as working to build trust and understanding around the development and deployment of these technologies.

Overall, the development of a superintelligence is a complex and multifaceted issue, and it is important for governments and researchers to take a thoughtful and proactive approach to ensure that these technologies are developed in a safe and responsible manner. By investing in research, promoting transparency and openness, creating international norms and regulations, and engaging in open dialogue with the public, we can help to mitigate the potential risks and realize the many benefits that AI has to offer.

Conclusion

In conclusion, the singularity is a hypothetical event in which artificial intelligence surpasses human intelligence, potentially leading to a dramatic transformation of society and perhaps of humans themselves. While some experts predict a utopian future in which AI solves all human problems, others warn of the potential risks and downsides, such as the possibility of an uncontrollable and hostile superintelligence, job displacement and economic disruption, and ethical and moral implications.

The development of a superintelligence is a complex and multifaceted issue that requires a thoughtful and proactive approach. Governments and researchers should invest in research on AI safety and alignment, promote transparency and openness in the development of AI, create international norms and regulations around the development and deployment of AI, and engage in open dialogue with the public about the potential risks and benefits of AI.

To mitigate potential risks, researchers are working on developing "friendly AI" systems that are designed to be aligned with human values and goals, although these efforts are still in their early stages. The development of such systems could be aided by the creation of tools and techniques for ensuring that AI remains aligned with human values and goals.

Governments and researchers could also work to create policies and regulations that mitigate potential risks and address potential downsides, while also investing in research on the social and economic implications of AI. Promoting transparency and openness in the development of AI could also build trust in these technologies. Finally, open dialogue with the public is critical: educating the public about the capabilities and limitations of AI, as well as its potential risks and benefits, could help to build support for responsible AI development.

Overall, the development of a superintelligence is a significant challenge that requires a comprehensive approach. Through research, transparency, international norms, and public engagement, we can help to mitigate the potential risks and realize the many benefits that AI has to offer.

References

- Vinge, V. (1993). The coming technological singularity: How to survive in the post-human era. Whole Earth Review, 81, 89-95.

- Kurzweil, R. (2005). The Singularity Is Near: When Humans Transcend Biology. Penguin.

- Russell, S. J., & Norvig, P. (2010). Artificial Intelligence: A Modern Approach (3rd ed.). Prentice Hall.

- Bostrom, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford University Press.

- Yudkowsky, E. (2008). Artificial intelligence as a positive and negative factor in global risk. In N. Bostrom & M. M. Ćirković (Eds.), Global Catastrophic Risks (pp. 308-345). Oxford University Press.

- Amodei, D., Olah, C., Steinhardt, J., Christiano, P., Schulman, J., & Mané, D. (2016). Concrete problems in AI safety. arXiv preprint arXiv:1606.06565.

- Chalmers, D. J. (2010). The Singularity: A Philosophical Analysis. Journal of Consciousness Studies, 17(9-10), 7-65.

- Goertzel, B. (2014). The Need for Friendly AI. Journal of Consciousness Studies, 21(3-4), 146-161.

- Marcus, G. (2018). Deep Learning: A Critical Appraisal. arXiv preprint arXiv:1801.00631.

- Brynjolfsson, E., & McAfee, A. (2014). The second machine age: Work, progress, and prosperity in a time of brilliant technologies. W. W. Norton & Company.

- Bostrom, N. (2003). Ethical issues in advanced artificial intelligence. Cognitive, Emotive and Ethical Aspects of Decision Making in Humans and in Artificial Intelligence, 12-17.

- Tegmark, M. (2017). Life 3.0: Being human in the age of artificial intelligence. Alfred A. Knopf.

- Sotala, K., & Yampolskiy, R. V. (2015). Responses to Catastrophic AGI Risk: A Survey. Physica Scripta, 90(1), 018001.

- National Science and Technology Council, Committee on Technology. (2016). Preparing for the future of artificial intelligence. The White House.

- Grabara, J. (2019). Health and safety management in the aspects of singularity and human factor. MATEC Web of Conferences, 290, 12014. doi:10.1051/matecconf/201929012014.