Bits, Bytes & Pieces

Post of the month: Sep 2023

Welcome!

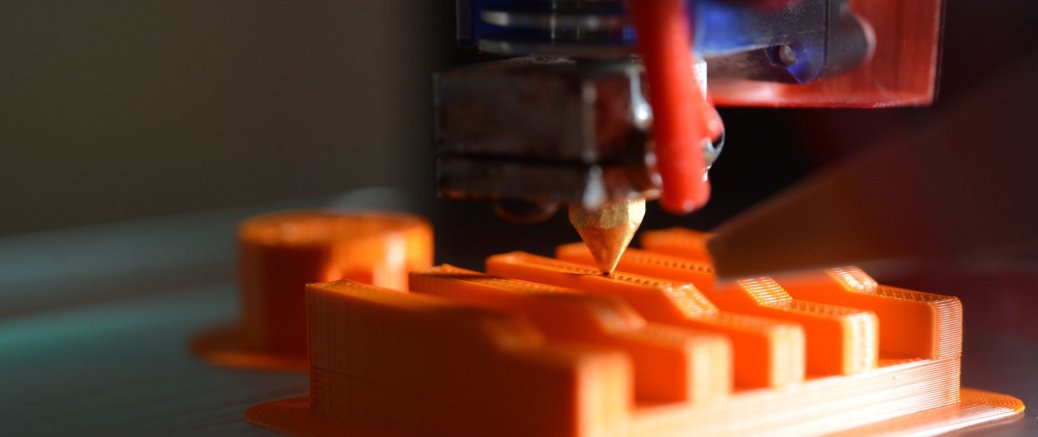

Let's embark on a thrilling exploration where programming, digital electronics, web design, and the world of Making come together. At Bits, Bytes & Pieces, we're not just discussing the tech world; we're navigating it, learning its quirks and nuances, one byte at a time. From the wonders of 3D printing to the fusion of electronics and mechanical systems, we appreciate the craftsmanship behind every creation.

I'm here, perhaps like you, as an informed amateur. Passionate, curious, and eager to demystify not just the realms of code and circuits, but also the artistry that blends the digital and tangible. As I delve into projects – be they digital explorations or tactile creations, those that soar or stumble – I share every insight, hiccup, and triumph with you.

This isn't just a blog; it's a chronicle of a journey. A space where we can celebrate the wins, learn from the missteps, and perpetually evolve. Whether you're taking your first steps in the digital realm, crafting with hands and tools, or have trodden this multifaceted path for a while, there's a story here that resonates with you.

Bits, Bytes & Pieces: Where development, design, electronics, and the world of Making come together. Explore, experiment, and evolve.


In the ever-evolving world of technology, few advancements have captured our collective imagination quite like the meteoric rise of generative AI. Especially with behemoths like OpenAI's GPT series and Google's Bard leading the charge, the landscape of what machines can achieve has been redrawn. Among the most tantalizing feats? Their ability to conjure up code snippets, rectify pesky bugs, and even draft more extensive coding sequences from the merest hint of a prompt. The allure is undeniable: imagine a world of streamlined efficiency, diminished human error, and coding knowledge that isn’t locked behind the formidable gates of complex jargon and years of experience.

Introduction

In recent times, the digital landscape has witnessed a fascinating trend: the rise of no-code web and application development. Think of it as DIY for the digital realm! Gone are the days when the only way to build a website or an app was by getting tangled in lines of code or hiring a professional developer. No-code platforms now offer the promise of creating digital wonders with a simple drag-and-drop action. In this essay, we'll dive deep into the world of no-code, unraveling its perks and pitfalls, looking into the major players in this field, discussing costs, and figuring out just how steep (or gentle) the learning curve is. Additionally, we'll look at some inspiring success stories and insights from the critics.

The concept of the "singularity" refers to a hypothetical future event in which artificial intelligence (AI) becomes capable of self-improvement, leading to an exponential increase in intelligence and technological progress. Some proponents of the singularity argue that this event could have significant and even transformative effects on human civilization. Others are more skeptical about both the likelihood of such an event and its potential consequences. Here we will examine the singularity concept and discuss whether the current strides in AI research are really headed in that direction.

Introduction

Python is a popular high-level programming language that is widely used in fields such as web development, data science, machine learning, artificial intelligence, and scientific computing. It was conceived in the late 1980s, first released in 1991, and is known for its simple syntax and readability, making it easy to learn and use. Python is an interpreted language, meaning that code is run by an interpreter at runtime without a separate compile-and-link step, which allows for rapid development and prototyping. It also has a vast collection of third-party libraries and frameworks that make it possible to accomplish complex tasks with minimal coding effort. Popular examples include NumPy, Pandas, Django, Flask, TensorFlow, and PyTorch. Python is also versatile and can be used for a wide range of applications, from building web applications to analyzing large datasets and creating machine learning models.
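To give a feel for the readability mentioned above, here is a minimal sketch that counts words using only the standard library. The function name `word_counts` and the sample sentence are made up for illustration; they are not taken from the post itself.

```python
from collections import Counter


def word_counts(text: str) -> Counter:
    """Return how often each lowercase, whitespace-separated word appears."""
    words = text.lower().split()
    return Counter(words)


# Hypothetical sample input, chosen only for this illustration.
counts = word_counts("bits bytes and pieces and more bits")

# Print each word with its count, most frequent first.
for word, count in counts.most_common():
    print(f"{word}: {count}")
```

The whole task fits in a handful of lines with no setup beyond a single import, which is the kind of rapid prototyping the paragraph describes.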